The Convergence of AI and Blockchain Technology: The Projects Shaping the Future

23 July 2024

Blockchain and artificial intelligence, two of the most transformative technologies of our time, are coming together to tackle critical challenges and amplify each other’s strengths. This article spotlights projects such as Akash, Gensyn, and Bittensor.

Case Study 1: Akash Network

Akash Network is a prominent project in decentralized cloud computing. The network’s native token, AKT, is multifunctional: it secures the network, facilitates payments, and encourages user engagement. Akash’s first mainnet, launched in 2020, introduced a permissionless marketplace for cloud computing, initially offering services like storage and CPU leasing. In 2023, Akash broadened its offerings with a GPU mainnet that lets users lease GPUs affordably for AI training and inference tasks, presenting a cost-effective alternative to traditional centralized services.


Within the Akash ecosystem, the primary stakeholders are the Tenants and the Providers. Tenants, who need computational resources, and Providers, who supply them, interact through a reverse auction. Tenants specify their computational needs, including server locations and the types of hardware required, and Providers submit competing bids. Each contract is awarded to the lowest bidder, which keeps prices competitive and allocates resources efficiently.
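The reverse auction described above can be sketched in a few lines of Python. This is a minimal illustration, not Akash's actual implementation: the `Order` and `Bid` types, their field names, and the `max_price` ceiling are all hypothetical and chosen only to show the "lowest eligible bid wins" rule.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Order:
    """A tenant's request (hypothetical fields, for illustration only)."""
    region: str
    gpu_model: str
    max_price: float  # assumed ceiling the tenant is willing to pay


@dataclass(frozen=True)
class Bid:
    """A provider's offer (hypothetical fields, for illustration only)."""
    provider: str
    region: str
    gpu_model: str
    price: float


def select_winner(order: Order, bids: list[Bid]) -> Optional[Bid]:
    """Return the cheapest bid that satisfies the order, or None if none qualifies."""
    eligible = [
        b for b in bids
        if b.region == order.region
        and b.gpu_model == order.gpu_model
        and b.price <= order.max_price
    ]
    # Reverse auction: the lowest price wins, not the highest.
    return min(eligible, key=lambda b: b.price, default=None)
```

A bid from the wrong region or above the tenant's ceiling is filtered out before prices are compared, so a cheaper but non-matching offer never wins.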

Despite the promising initial uptake, Akash continues to explore strategies to fine-tune the balance between supply and demand and to attract new users. These efforts are crucial for sustaining growth and broadening the reach of decentralized computing solutions, making advanced computational resources more accessible across various sectors.

Case Study 2: Gensyn

Gensyn operates on the cutting edge of decentralized computing, specifically targeting machine learning model training. With a mission statement of “coordinating electricity and hardware to build collective intelligence,” Gensyn embodies the principle that anyone, anywhere should have the ability to participate in ML training. This platform aims to democratize access to computational resources, making ML training more accessible and affordable compared to traditional centralized platforms.

How Gensyn Works

Gensyn’s network is structured around four key roles: submitters, solvers, verifiers, and whistleblowers.

- Submitters: Individuals or entities that have models they wish to train. They submit tasks to the Gensyn network, including the training objectives, the specific model to be trained, and the necessary training data. Submitters also pre-pay an estimated fee for the compute resources they will require, which are provided by the solvers.

- Solvers: These are the providers of computational power. They accept tasks from submitters, perform the required training, and then submit evidence of their work to the network.

- Verifiers: Tasked with ensuring the integrity and accuracy of the training conducted by solvers. They review the completed tasks to confirm that they meet the specified training criteria.

- Whistleblowers: They play a crucial role in maintaining the network’s integrity by checking that verifiers are performing their duties honestly. Gensyn incentivizes whistleblowers with rewards for identifying and reporting any discrepancies or misconduct.
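One way to picture how the four roles hand a task to one another is as a small state machine. The sketch below is an assumption-laden illustration, not Gensyn's protocol: the `TrainingTask` class, its status names, and its method names are hypothetical, and real fee escrow and rewards are left out.

```python
from dataclasses import dataclass, field
from enum import Enum


class Status(Enum):
    SUBMITTED = "submitted"   # submitter has posted the task and pre-paid the fee
    SOLVED = "solved"         # solver has trained and submitted evidence
    VERIFIED = "verified"     # verifier has accepted the evidence
    DISPUTED = "disputed"     # verifier rejected, or a whistleblower challenged


@dataclass
class TrainingTask:
    """Hypothetical task record; field names are illustrative assumptions."""
    submitter: str
    prepaid_fee: float
    status: Status = Status.SUBMITTED
    log: list[str] = field(default_factory=list)

    def _expect(self, status: Status) -> None:
        if self.status is not status:
            raise ValueError(f"expected {status.value}, got {self.status.value}")

    def submit_evidence(self, solver: str) -> None:
        self._expect(Status.SUBMITTED)
        self.status = Status.SOLVED
        self.log.append(f"solver {solver} submitted evidence of training")

    def review(self, verifier: str, accepted: bool) -> None:
        self._expect(Status.SOLVED)
        self.status = Status.VERIFIED if accepted else Status.DISPUTED
        verdict = "accepted" if accepted else "rejected"
        self.log.append(f"verifier {verifier} {verdict} the work")

    def challenge(self, whistleblower: str) -> None:
        # Whistleblowers check the verifier, so only verified work can be challenged.
        self._expect(Status.VERIFIED)
        self.status = Status.DISPUTED
        self.log.append(f"whistleblower {whistleblower} challenged the verification")
```

Each method refuses to run out of order, which mirrors the idea that every role acts on the output of the previous one.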

One of Gensyn’s most notable innovations is its verification system, which includes:

- Probabilistic Proof-of-Learning: Allows for the probabilistic validation of the training process without the need for complete re-execution.

- Graph-based Pinpoint Protocol: Enables pinpointing errors in the training process through a structured verification framework.

- Truebit-style Incentive Games: Encourages honest participation by offering incentives for detecting faults in the verification process.

This multi-faceted approach not only ensures the accuracy of the training results but also significantly reduces the costs and inefficiencies associated with traditional verification methods.
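The intuition behind checking work without full re-execution, and then pinpointing where a run went wrong, can be shown with a toy example. This is a simplified stand-in and not Gensyn's actual proof-of-learning or pinpoint protocol: `train_step` is a made-up deterministic update, and the bisection assumes that two traces which diverge stay diverged.

```python
import random
from typing import Optional


def train_step(state: float, step: int) -> float:
    """Toy deterministic update standing in for one training step (assumption)."""
    return state * 0.9 + step


def run_training(initial: float, steps: int) -> list[float]:
    """Return checkpoints where checkpoints[i] is the state after i steps."""
    checkpoints = [initial]
    for i in range(steps):
        checkpoints.append(train_step(checkpoints[-1], i))
    return checkpoints


def spot_check(checkpoints: list[float], samples: int, rng: random.Random) -> bool:
    """Re-execute a random sample of single steps instead of the whole run."""
    n_steps = len(checkpoints) - 1
    for i in rng.sample(range(n_steps), min(samples, n_steps)):
        if train_step(checkpoints[i], i) != checkpoints[i + 1]:
            return False  # the claimed transition does not reproduce
    return True


def first_divergence(claimed: list[float], reference: list[float]) -> Optional[int]:
    """Bisect for the first checkpoint index at which two traces differ.

    Assumes both traces share checkpoint 0 and stay different once they diverge.
    """
    if claimed[-1] == reference[-1]:
        return None
    lo, hi = 0, len(claimed) - 1  # invariant: agree at lo, differ at hi
    while hi - lo > 1:
        mid = (lo + hi) // 2
        if claimed[mid] == reference[mid]:
            lo = mid
        else:
            hi = mid
    return hi
```

Sampling fewer steps makes the check cheaper but lowers the chance of catching a single bad transition, which is why such a check is probabilistic; the bisection then narrows a dispute to one step in a logarithmic number of comparisons.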

Cost Effectiveness and Market Comparison

Gensyn claims to provide machine learning training at up to 80% lower cost than centralized alternatives like AWS, and to outperform other decentralized projects like Truebit in efficiency tests. This cost effectiveness is achieved by harnessing underutilized computational resources from a diverse array of providers, ranging from small data centers to retail users.

Challenges and Future Directions

While Gensyn’s decentralized approach offers significant benefits, it also presents challenges, primarily related to the heterogeneity of the computational resources and the increased costs of coordinating such a diverse network. The latency and bandwidth costs are inherently higher when the compute resources are spread across the globe.

Despite these challenges, Gensyn remains a compelling proof of concept for what the future of decentralized ML training might look like. The platform has not fully launched yet but continues to develop its infrastructure and refine its protocols, aiming to transform how machine learning training is conducted worldwide.

Case Study 3: Bittensor

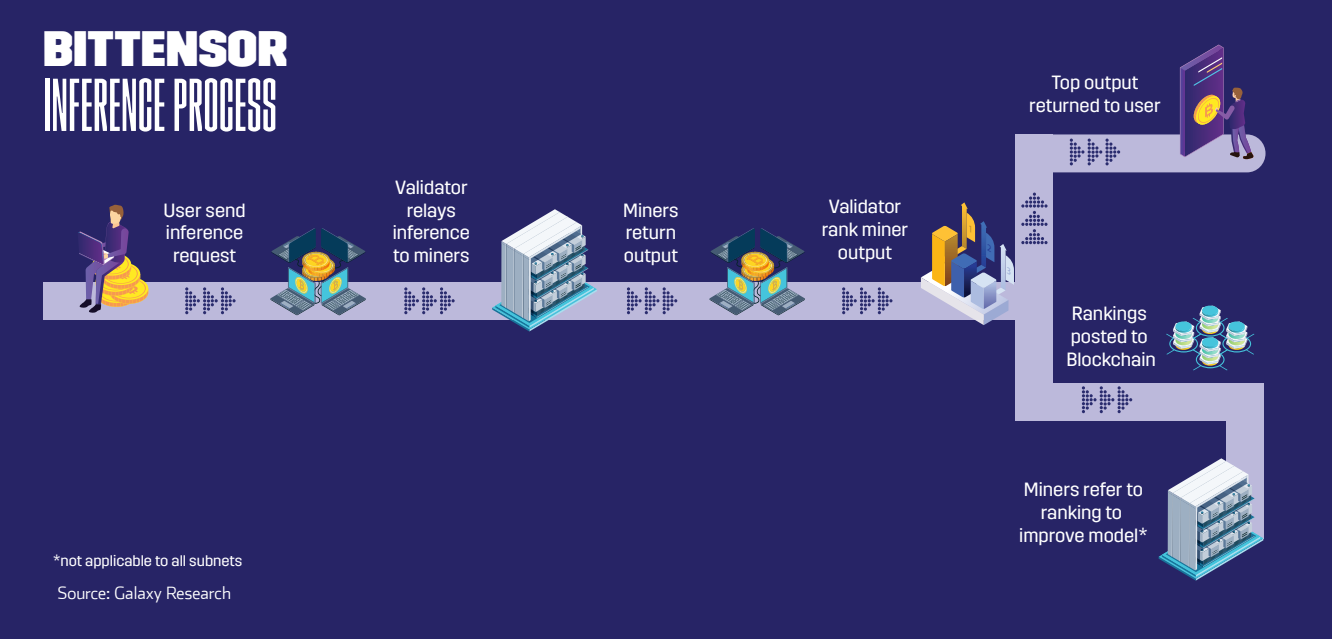

Bittensor is a decentralized compute protocol built on the Substrate framework, launched in 2021 to transform artificial intelligence into a collaborative effort. Inspired by Bitcoin, it features a native currency, TAO, with a capped supply of twenty-one million and a four-year halving cycle. Unlike traditional Proof of Work systems, Bittensor utilizes a novel “Proof of Intelligence” mechanism, where participants, called miners, respond to inference requests with AI model outputs.
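A capped supply combined with a halving cycle implies a simple geometric schedule, which the following sketch works through. It assumes an idealized model in which each halving era emits exactly half of the previous one and the total converges to the cap; the function name is hypothetical and the network's real schedule may differ in its details.

```python
def era_emissions(cap: float, eras: int) -> list[float]:
    """Tokens emitted in each halving era under an idealized halving schedule.

    If the first era emits `first` tokens and every later era emits half as
    much, the total is first * (1 + 1/2 + 1/4 + ...) = 2 * first.
    Setting that equal to the cap gives first = cap / 2.
    """
    first = cap / 2
    return [first / 2**k for k in range(eras)]


# With a cap of twenty-one million, half of all tokens are emitted in the
# first era, a quarter in the second, and so on.
schedule = era_emissions(21_000_000, 4)
```

Under these assumptions the first four-year era would account for 10.5 million TAO, and each later era adds half as much as the one before it, so the cap is approached but never exceeded.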

Artificial General Intelligence (AGI) - The case of Bittensor

The Bittensor network comprises two main roles:

- Validators: They send inference requests to miners and assess the responses based on quality, awarding “vtrust” scores that determine how much TAO miners earn. These scores incentivize validators to accurately and consistently evaluate the outputs.

- Miners (Servers): These participants run the AI models and generate outputs. They compete to provide the most accurate responses to earn TAO rewards. Their outputs could potentially be trained on platforms like Gensyn, enhancing their effectiveness and reward potential.

The interaction between validators and miners forms the Yuma Consensus, encouraging the production of high-quality AI outputs and accurate evaluations. As miners improve and the network’s intelligence grows, Bittensor plans to expand by introducing an application layer. This layer will allow developers to build applications that query the Bittensor network directly.
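To make the validator and miner loop concrete, here is a deliberately simplified sketch of turning validator scores into miner rewards. It is not the actual Yuma Consensus: it assumes every validator counts equally, uses a plain median as the agreed score, and splits a fixed emission in proportion to it. The function name and data layout are hypothetical.

```python
from statistics import median


def distribute_rewards(
    scores: dict[str, dict[str, float]], emission: float
) -> dict[str, float]:
    """Split `emission` among miners in proportion to their median score.

    scores[validator][miner] is that validator's quality score for the miner;
    a miner a validator did not score is treated as 0.0 (assumption).
    """
    miners = sorted({m for per_validator in scores.values() for m in per_validator})
    # The median dampens the effect of a single outlying validator.
    consensus = {
        m: median(per_validator.get(m, 0.0) for per_validator in scores.values())
        for m in miners
    }
    total = sum(consensus.values())
    if total == 0:
        return {m: 0.0 for m in miners}
    return {m: emission * score / total for m, score in consensus.items()}
```

For example, if three validators score miner `a` at 0.8, 0.6, and 1.0 and miner `b` at 0.2, 0.4, and 0.0, the median scores are 0.8 and 0.2, so `a` receives about four fifths of the emission regardless of the one validator that scored `b` at zero.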

Since October 2023, Bittensor has launched over 32 subnets, each designed to incentivize specific behaviors, such as text prompting or image generation. These subnets are poised to support a variety of applications, facilitating the integration of decentralized AI into broader tech ecosystems.

Glossary

- Substrate Framework: Provides a flexible and extensible set of tools that allow developers to create customized blockchains with pre-built modules or by writing their own custom logic such as networking, consensus, and transaction processing.

- Yuma Consensus: Consensus mechanism used in blockchain networks that emphasizes efficiency and scalability. It aims to achieve high throughput and low latency in transaction processing while maintaining security and decentralization.

- Subnets (short for “subnetworks”): Smaller, independent networks within a larger blockchain ecosystem. They can operate with their own consensus mechanisms, rules, and governance structures while still being part of the overarching network.

- zkML (Zero-Knowledge Machine Learning): Method of applying zero-knowledge proofs (ZK proofs) to machine learning processes. ZK proofs are cryptographic techniques that allow one party (the prover) to demonstrate to another party (the verifier) that they know a specific piece of information without revealing the information itself. In zkML, these proofs ensure that machine learning models can be trained and used without exposing sensitive data.

To learn more about the innovations driving blockchain forward – read the full report here.

